Earlier this year, I reviewed a report on AI ethics, published by the World Economic Forum and written mainly by Deloitte employees, that I dismissed as not helpful. Deloitte's current report, quite the contrary, is well written and full of good advice instead of the usual platitudes (see Beyond Good Intentions: Navigating the Ethical Dilemmas Facing the Technology Industry, published by Deloitte).

I had the chance to discuss the paper with the lead author, Paul Silverglate. Here's my review of the paper, with that conversation in mind.

The report begins with a clear set of objectives:

That's an ambitious proposition for a report. One of the report's first assertions was:

This is a bold statement. While it is true that technology, particularly AI, did not create quantitative methods of bias and discrimination, many have failed even to begin to address the issue. Social media is a primary culprit. Data brokers need to face more stringent regulations too. But there are other insidious examples such as video games, market researchers, and podcasts.

The paper proposes five dilemmas:

On data usage, Deloitte's report calls out data breaches, and transgressions with consumer data:

Universal standards are unlikely. In the US, the protection of people from the private sector has virtually no legal standing, with a few notable exceptions. Because the US is a country of 50+ jurisdictions, the standards for data will range from strict to non-existent. Internationally, there are already havens for unscrupulous data brokers to harbor their data with little to no scrutiny.

There also seems to be a subtext in the above comment that technology companies want to comply. For every Apple or Microsoft, there are thousands of technology companies that will only act when forced to. Microsoft's facial recognition software and Apple's Federated Learning raise issues too.

On environmental sustainability, the authors raise these issues: "Energy use, supply chains that could be more efficient, manufacturing waste, and water use in semiconductor fabrication." Current supercomputers require 40-50MW to run, enough to power a small city. How much fossil fuel does it take to generate and transmit that much power 24 hours a day?
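To put that power draw in perspective, here is a back-of-the-envelope sketch of the energy involved. It only converts the 40-50MW figure above into megawatt-hours, assuming a constant draw around the clock; the function name is mine, and it says nothing about how much fossil fuel is burned, which depends on the generation mix and transmission losses.

```python
def daily_energy_mwh(power_mw: float, hours: float = 24.0) -> float:
    """Energy in MWh for a constant power draw (assumption: no idle time)."""
    return power_mw * hours


# The 40-50MW range quoted above; constant load is an assumption.
for power_mw in (40, 50):
    per_day = daily_energy_mwh(power_mw)
    per_year = per_day * 365
    print(f"{power_mw} MW -> {per_day:,.0f} MWh/day, {per_year:,.0f} MWh/year")
```

At a constant 40-50MW, that works out to 960-1,200 MWh per day.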

To me, the most significant sustainability issue is sourcing the rare earth materials used as components in high technology devices, including smartphones, digital cameras, computer hard disks, fluorescent and light-emitting-diode (LED) lights, flat-screen televisions, computer monitors, and electronic displays. Large quantities of some rare earths are also used in clean energy and defense technologies. Currently, China controls 87% of the market, and grave concerns have been raised about the toxicity of the production.

On threats to truth, the authors warn:

The statement is undeniable. My upcoming article for diginomica on AI and disinformation will delve further. On the difficult issue of AI explainability, the authors write:

Explainability is a fashionable term, but it’s not useful. What developers of algorithms need is to understand the model. Explainability only provides what the model does in the model’s terms.

The category "Trustworthy AI" raises some issues for me. Trustworthy is not necessarily ethical. Trust is based on transparency, reputation, and sometimes, unfortunately, blind faith in wholly untrustworthy characters. It is not a consummate good. Putting trust at the center implies ethics are of secondary concern.

One prominent example of ethical-but-not-trustworthy is the use of machine learning in radiology. After some early gaffes when Stanford Medical's radiation oncology model produced noticeably different results across ethnic groups, they went back to the drawing board. They developed a system that identified tumors that most of a panel of radiologists did not, and the rates of false positives and false negatives were evenly distributed across groups. They'd developed an ethical system, cleansed of bias, but trust was a different issue.
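For readers wondering what "evenly distributed across groups" looks like in practice, here is a minimal sketch of the kind of check involved: computing false-positive and false-negative rates per group and comparing them. This is my own illustration, not Stanford's method; the function name, the group labels, and the sample records are all hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, actual, predicted), with boolean labels.

    Returns {group: {"fpr": ..., "fnr": ...}}; a rate is None when the
    group has no negatives (for fpr) or no positives (for fnr).
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["pos"] += 1
            if not predicted:
                c["fn"] += 1  # missed a real tumor
        else:
            c["neg"] += 1
            if predicted:
                c["fp"] += 1  # flagged a tumor that was not there
    return {
        g: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for g, c in counts.items()
    }


# Hypothetical toy data: (group, tumor actually present, model said tumor).
sample = [
    ("A", True, True), ("A", True, False), ("A", False, False), ("A", False, True),
    ("B", True, True), ("B", True, False), ("B", False, False), ("B", False, True),
]
rates = error_rates_by_group(sample)
print(rates)
```

Equal rates across groups say nothing about whether clinicians or patients will trust the system, which is exactly the distinction at issue here.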

Trust is about what or who should be trusted or how to create trust, whether or not it's ethical. Though they are commingled in specific ways, pursuing trust to the exclusion of ethics is dangerous.

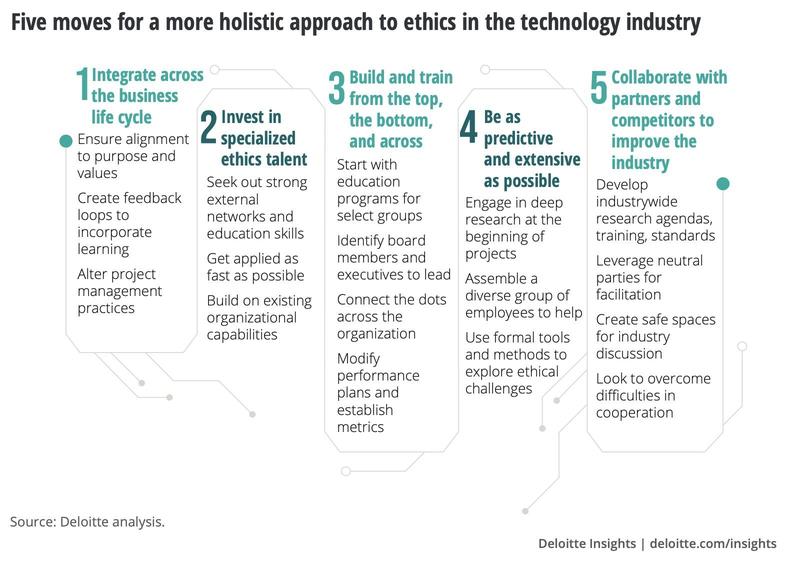

"Five key moves to help build clarity and capacity"

Instead of simply stating the problems, the paper goes on to suggest some solutions.

Here's my review of each one:

My review: ho-hum.

My review: None of these things requires deep psychological manipulations on the part of management. And the whole narrative gives me a creepy feeling that implies a paternalistic approach - those above you engage in activities to make you ethical. I think it's the other way around. I know it. This sort of thinking is endemic among "strategy" consultants who only see the C-suite perspective.

My review: This seems to imply a return to the previously tried, and only marginally successful, attempts to educate the whole organization in ethics. There is no evidence that this has the desired effect. More than a course on deontological vs. consequentialist thinking, people need an organizational sense of how these ideas apply to their work. Remember, ethics is discursive. It's not rules.

My review: These suggestions are not likely to be initiated in organizations, at least not for very long.

My review: These are competitive, secretive organizations. Any collaboration would be superficial.

My take

There is a lot of useful advice in this paper, even though I disagree with some of it, as noted. I enjoyed my conversation with Silverglate, who was open to some give-and-take and well-informed on the subject.